Introduction to Open Source in AI

Open-source projects have become a cornerstone in the field of artificial intelligence (AI), playing a crucial role in democratizing AI development and fostering innovation. The growing prominence of these initiatives is not merely a trend but a significant shift in how AI technologies are developed and deployed. By making code, models, and datasets freely accessible, open-source projects have lowered the barriers to entry for researchers, developers, and organizations, enabling them to contribute to and benefit from cutting-edge advancements in AI.

The collaborative nature of open-source projects in AI has led to a vibrant ecosystem where diverse ideas and expertise converge. Large technology companies such as Google, Facebook, and Microsoft have been instrumental in nurturing these initiatives. These companies not only contribute substantial resources and expertise but also actively engage with the open-source community. This synergy between industry giants and the global community accelerates the pace of innovation, leading to rapid advancements and more robust, scalable AI solutions.

Furthermore, the open-source model fosters a spirit of transparency and accountability, which is vital in a field as impactful as AI. When the underlying algorithms and data are open for scrutiny, it ensures that AI systems are evaluated rigorously for biases, fairness, and ethical considerations. This openness helps in building trust among users and stakeholders, making the adoption of AI technologies more widespread and equitable.

Overall, the rise of open-source projects in AI signifies a pivotal moment in the technology’s evolution. It not only democratizes access to powerful AI tools and resources but also propels a collaborative approach to tackling some of the most pressing challenges in the field. As we look ahead to 2024, the role of open-source in shaping the future of AI cannot be overstated, promising a landscape rich with innovation, inclusivity, and shared progress.

Historical Context and Evolution

The journey of open-source software, tracing back to the early days of computing, has significantly influenced the tech industry. The collaborative nature of open-source projects fostered a culture where code was shared freely, leading to rapid innovation and extensive community engagement. One of the pioneering moments in the open-source movement was the creation of the GNU Project in 1983 by Richard Stallman, which laid the foundation for the development of free software. This momentum was further bolstered by the release of the Linux kernel by Linus Torvalds in 1991, a cornerstone event that galvanized the open-source community and showcased the potential of collective contributions.

Significant milestones in open-source AI have surfaced prominently over the past decade. In 2015, Google open-sourced TensorFlow, a seminal moment that broadened access to advanced machine learning tools. This was soon followed by contributions from other tech giants; Facebook released PyTorch in 2016, which gained traction for its dynamic computation graph and ease of use. These events signaled a paradigm shift, where the principles of open source began to permeate the AI research community, leading to a proliferation of innovative projects and frameworks.

The past decade has seen an incredible evolution in the open-source AI landscape. Various factors have fueled the rise of open-source AI projects. Firstly, the increased accessibility and affordability of computational resources have enabled more individuals and organizations to participate in and contribute to these projects. Secondly, the transparency and shared knowledge inherent in open-source platforms have fostered reproducibility and accelerated advancements in AI research. Lastly, the collaborative ethos of open source has driven the democratization of AI technologies, making them accessible to a broader audience and facilitating a diverse range of applications across industries.

As we move towards 2024, advances in open-source AI projects continue to demonstrate the impact of collective intelligence and shared innovation, shaping the technological landscape and offering promising prospects for the future.

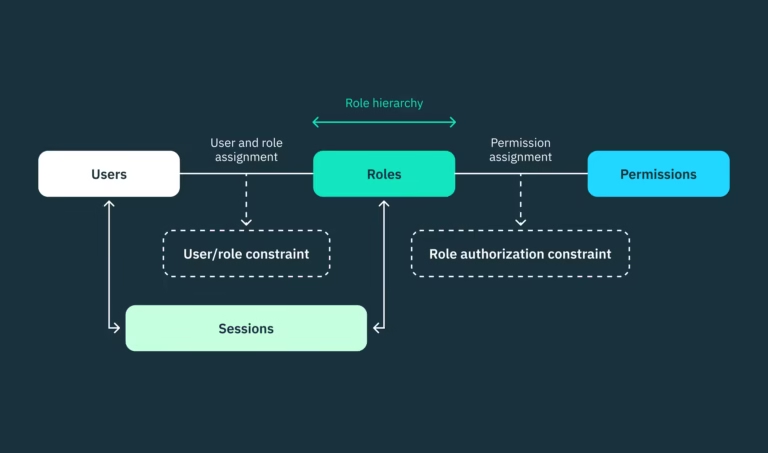

Top Open Source AI Frameworks

Open-source AI frameworks have played a pivotal role in democratizing artificial intelligence, empowering researchers, developers, and organizations to harness the potential of AI technologies. Among these frameworks, a few stand out due to their profound impact, robust community support, and continuous evolution. Here, we delve into the most influential open-source AI frameworks, shedding light on their unique features and potential advancements to watch for in 2024.

TensorFlow: Developed by the Google Brain team, TensorFlow has become a cornerstone in the AI community, offering comprehensive tools for building machine learning models. Its extensive library supports both high-level APIs for ease of use and low-level APIs for more granular control. TensorFlow’s vast community and synergy with Google’s ecosystem make it a prime candidate for continuous innovation, with upcoming improvements likely to focus on achieving even greater scalability and performance.

Key Features of TensorFlow:

- Versatility: TensorFlow supports various machine learning tasks, including image and speech recognition, natural language processing, and predictive analytics. It can be used for both research and production environments.

- Scalability: TensorFlow is designed to run on various platforms, from mobile devices to large-scale distributed systems. It supports CPUs, GPUs, and TPUs (Tensor Processing Units), making it scalable for different computational needs.

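- Flexible Architecture: TensorFlow represents models as computational graphs, which define the flow of data through a series of operations. TensorFlow 2.x executes operations eagerly by default and can compile Python functions into graphs with tf.function, so the same model can be debugged interactively and then optimized as a graph.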

- Ecosystem: TensorFlow has a rich ecosystem of tools and libraries, such as TensorFlow Lite for mobile and embedded devices, TensorFlow Extended (TFX) for production ML pipelines, and TensorFlow Hub for reusable machine learning modules.

- Community Support: As one of the most popular AI frameworks, TensorFlow benefits from a large and active community, extensive documentation, and numerous tutorials and courses available online.
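
The graph-based approach described in the list above can be illustrated without TensorFlow itself. The following is a toy sketch in plain Python, not TensorFlow's API: the `GRAPH` dictionary, the node names, and the `run_graph` helper are all hypothetical. It declares operations and their inputs up front, then evaluates them in dependency order.

```python
from graphlib import TopologicalSorter
import operator

# Each node maps to (operation, names of its input nodes).
# Hypothetical toy graph for y = (a + b) * b; this is not TensorFlow's API.
GRAPH = {
    "sum": (operator.add, ("a", "b")),
    "y": (operator.mul, ("sum", "b")),
}

def run_graph(graph, feeds, target):
    """Evaluate `target` by visiting nodes in dependency order."""
    values = dict(feeds)
    deps = {name: set(inputs) for name, (_, inputs) in graph.items()}
    for name in TopologicalSorter(deps).static_order():
        if name in values:
            continue  # a fed input, nothing to compute
        op, inputs = graph[name]
        values[name] = op(*(values[i] for i in inputs))
    return values[target]

result = run_graph(GRAPH, {"a": 2.0, "b": 3.0}, "y")
```

Because the whole graph is known before anything runs, a real framework can inspect, visualize, or optimize it ahead of execution, which is the benefit the list item refers to.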

Applications:

- Deep Learning: TensorFlow is particularly known for its deep learning capabilities, enabling the creation of neural networks for tasks like image classification, object detection, and generative models.

- Research and Development: Researchers use TensorFlow to experiment with new machine learning algorithms and architectures, thanks to its flexibility and support for custom operations.

- Industry Adoption: TensorFlow is used by major companies in various industries, including healthcare, finance, and technology, for tasks such as recommendation systems, fraud detection, and autonomous vehicles.

In summary, TensorFlow is a powerful and flexible framework that has become a cornerstone in the development and deployment of AI and machine learning solutions.

PyTorch: Originating from Facebook’s AI Research lab, PyTorch has rapidly gained traction due to its dynamic computation graph and intuitive interface. PyTorch is particularly beloved in the research community for its flexibility and ease of debugging, making it an indispensable tool for experimental and production projects alike. The emergence of PyTorch Lightning and the integration of new hardware acceleration techniques signal exciting developments anticipated in 2024.

Key Features of PyTorch:

- Dynamic Computational Graphs: Unlike some other frameworks, PyTorch builds computational graphs dynamically, allowing for more flexibility and ease when modifying and debugging models. This makes it particularly well-suited for research and experimentation.

- Pythonic Nature: PyTorch is designed to be intuitive and easy to use, integrating seamlessly with the Python programming language. Its syntax and functions feel natural for Python developers, reducing the learning curve.

- Tensor Operations and Autograd: PyTorch provides powerful tools for tensor computation (similar to NumPy) with strong GPU acceleration. The built-in autograd system automatically calculates gradients, simplifying the process of backpropagation in neural networks.

- Strong Community and Ecosystem: PyTorch has a rapidly growing community and a rich ecosystem of libraries and tools. Notable extensions include PyTorch Lightning for high-level model organization and fastai for easy-to-use training pipelines.

- Support for Research and Production: PyTorch is favored in the research community for its flexibility, but it also has strong tools for production deployment. TorchServe, for instance, is a scalable model-serving library specifically designed for PyTorch models.
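
To make the ideas of dynamic graphs and automatic differentiation more concrete, here is a minimal sketch of reverse-mode differentiation in plain Python. It is a teaching toy under stated assumptions, not PyTorch's implementation: the `Value` class and its attributes are hypothetical names, and only addition and multiplication on scalars are supported.

```python
class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent), one per parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        # Collect nodes so that each one appears after all of its parents.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad

x = Value(3.0)
w = Value(2.0)
# The graph is built while ordinary Python runs, so control flow can shape it.
y = x * w + x if x.data > 0 else x * w
y.backward()
```

Here `y` is `x * w + x`, so the gradient with respect to `x` is `w + 1` and the gradient with respect to `w` is `x`. The graph exists only because the Python expression was executed, which is what "dynamic" means in this context.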

Applications:

- Deep Learning Research: PyTorch is the framework of choice for many researchers due to its flexibility and support for complex, custom neural network architectures. It is commonly used in fields like computer vision, natural language processing, and reinforcement learning.

- Prototyping and Experimentation: The dynamic nature of PyTorch makes it ideal for quickly prototyping new ideas and testing hypotheses. Researchers can iterate rapidly and adjust their models on the fly.

- Industry Adoption: PyTorch is increasingly being adopted by industry leaders for tasks such as image and video analysis, recommendation systems, and language modeling. Companies like Facebook, Tesla, and Microsoft use PyTorch in their AI projects.

Community and Development:

- Active Development: PyTorch is under continuous development, with regular updates that introduce new features and improvements. The framework originated at Facebook (now Meta) and, since 2022, has been governed by the PyTorch Foundation under the Linux Foundation, with contributions from the open-source community.

- Educational Resources: PyTorch has extensive documentation and a wealth of tutorials, making it accessible to both beginners and experienced developers. It is also widely used in academia for teaching machine learning and AI concepts.

In summary, PyTorch is a versatile and user-friendly machine learning framework that is particularly popular in the research community. Its dynamic graph approach, Pythonic design, and strong support for experimentation make it a powerful tool for developing and deploying advanced AI models.

Apache MXNet: Developed under the Apache Software Foundation, MXNet is noted for its efficiency, scalability, and support for multiple programming languages. Its design accommodates both symbolic and imperative programming, which caters to diverse development needs. Amazon Web Services invested heavily in its ecosystem, but the project was retired to the Apache Attic in 2023 and is no longer actively developed. It remains worth understanding for its design ideas and for teams maintaining existing MXNet deployments, rather than as a project to expect new features from in 2024.

Key Features of Apache MXNet:

- Hybrid Programming Model: MXNet supports both imperative (dynamic) and symbolic (static) programming. This allows developers to easily switch between building models with dynamic computation graphs for flexibility and static graphs for efficiency and optimization.

- Scalability: MXNet is highly scalable, capable of running on multiple GPUs and distributed computing environments. It is optimized for performance, enabling efficient use of computational resources in both cloud and on-premises setups.

- Multi-Language Support: MXNet supports several programming languages, including Python, Scala, R, Julia, and C++. This makes it accessible to a wide range of developers and allows for easy integration into existing software ecosystems.

- Flexible and Modular Design: The framework provides a modular approach to building neural networks, with components that can be easily customized and extended. This flexibility is particularly useful for researchers who need to experiment with new model architectures.

- Automatic Differentiation: MXNet includes an advanced autograd feature for automatic differentiation, which simplifies the process of calculating gradients during backpropagation. This feature supports complex operations and is essential for training deep learning models.

- Gluon API: The Gluon API in MXNet provides a high-level interface that makes it easier to build, train, and deploy deep learning models. Gluon combines the flexibility of imperative programming with the speed and performance of symbolic programming, making it an ideal choice for both beginners and experienced developers.
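
The difference between imperative and symbolic execution can be sketched in a few lines of plain Python. This is an illustrative toy, not MXNet or Gluon code: `Sym`, `var`, `evaluate`, and `model` are hypothetical names introduced only for this example.

```python
class Sym:
    """A symbolic node: operations build an expression tree instead of computing."""

    def __init__(self, op, args):
        self.op, self.args = op, args

    def __add__(self, other):
        return Sym("add", (self, other))

    def __mul__(self, other):
        return Sym("mul", (self, other))

def var(name):
    """Create a named placeholder to be bound to a value later."""
    return Sym("var", (name,))

def evaluate(node, bindings):
    """Walk the expression tree and compute it for concrete inputs."""
    if node.op == "var":
        return bindings[node.args[0]]
    left, right = (evaluate(arg, bindings) for arg in node.args)
    return left + right if node.op == "add" else left * right

def model(a, b):
    # The same code works on plain numbers and on symbols.
    return a * b + a

# Imperative: runs immediately on concrete values, easy to inspect and debug.
eager_result = model(2.0, 5.0)

# Symbolic: build the graph once, then execute it with whatever inputs are bound.
graph = model(var("a"), var("b"))
symbolic_result = evaluate(graph, {"a": 2.0, "b": 5.0})
```

Both paths produce the same number. The symbolic path pays an up-front cost to build `graph`, but a real framework can then optimize and reuse it, which is the trade-off a hybrid model lets developers choose between.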

Applications:

- Deep Learning Models: MXNet is used to build and deploy a wide range of deep learning models, including convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequence modeling, and reinforcement learning algorithms.

- Industry Adoption: MXNet is employed by companies and organizations for various applications, such as natural language processing, computer vision, and time-series forecasting. Its scalability makes it suitable for large-scale industrial applications.

- Cloud Integration: MXNet is integrated with major cloud platforms, such as Amazon Web Services (AWS), which offers managed services like Amazon SageMaker for training and deploying MXNet models. This cloud integration simplifies the process of scaling AI workloads.

Community and Development:

- Development Status: MXNet was developed under the Apache Software Foundation with contributions from a global community of developers and researchers. The project was retired to the Apache Attic in 2023, so teams should not expect regular updates and should weigh this when choosing a framework for new work.

- Educational Resources: The MXNet community provides extensive documentation, tutorials, and example projects. This support makes it easier for developers to learn and start using the framework, whether they are new to deep learning or experienced practitioners.

Summary:

Apache MXNet is a powerful and versatile deep learning framework that offers a balance between flexibility and performance. Its hybrid programming model, scalability, multi-language support, and integration with cloud platforms make it a strong choice for both research and large-scale industrial applications. Whether you’re building cutting-edge AI models or deploying production systems, MXNet provides the tools and capabilities needed to succeed.

Scikit-learn: Known for bringing machine learning to the masses, Scikit-learn excels in simplicity and accessibility. It’s particularly favored for data mining and data analysis tasks. Built on NumPy and SciPy, and commonly paired with matplotlib for plotting, Scikit-learn’s extensive suite of machine learning algorithms makes it the go-to library for traditional machine learning applications. As the AI landscape evolves, expect Scikit-learn to keep refining its algorithms, data preprocessing tools, and model evaluation techniques.

Key Features of Scikit-learn:

- Wide Range of Algorithms: Scikit-learn includes a comprehensive collection of supervised and unsupervised learning algorithms. This includes classification, regression, clustering, dimensionality reduction, and ensemble methods. Popular algorithms like Support Vector Machines (SVM), Random Forest, k-Nearest Neighbors (k-NN), and k-Means are readily available.

- User-Friendly API: The library is designed with simplicity in mind, offering a consistent and easy-to-understand API. This makes it accessible to both beginners and experienced data scientists, allowing for quick experimentation and model development.

- Preprocessing Tools: Scikit-learn provides various tools for data preprocessing, including techniques for scaling, normalization, encoding categorical variables, feature extraction, and feature selection. These tools help in preparing datasets for machine learning models.

- Model Evaluation and Selection: The library includes robust utilities for model evaluation, such as cross-validation, grid search, and various metrics for assessing model performance. These features help in selecting the best model and tuning hyperparameters effectively.

- Integration with Python Ecosystem: Scikit-learn integrates seamlessly with other Python libraries like Pandas and Matplotlib, allowing users to easily handle data and visualize results. This integration makes it a powerful tool within the broader Python data science ecosystem.

- Scalability and Performance: While Scikit-learn is not designed for large-scale distributed computing, it is optimized for performance on single machines. It can efficiently handle moderate-sized datasets and provides options for parallel processing.

- Extensibility: Scikit-learn is designed to be easily extensible. Developers can integrate custom models and preprocessing steps with the existing pipeline framework, allowing for flexibility and customization according to specific needs.
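
The model evaluation ideas in the list above, cross-validation and grid search, can be sketched in plain Python. This is a simplified illustration and does not use Scikit-learn's API: the helper names (`k_fold_indices`, `knn_predict`, `cross_val_accuracy`) and the toy data are assumptions made up for this example.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_idx, test_idx) pairs; each sample is tested exactly once."""
    indices = list(range(n_samples))
    for fold in range(n_folds):
        test_idx = indices[fold::n_folds]
        held_out = set(test_idx)
        train_idx = [i for i in indices if i not in held_out]
        yield train_idx, test_idx

def knn_predict(train_x, train_y, x, k):
    """Majority vote among the k nearest 1-D training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    votes = [train_y[i] for i in nearest]
    return max(set(votes), key=votes.count)

def cross_val_accuracy(xs, ys, k, n_folds=4):
    """Fraction of samples predicted correctly when each is held out once."""
    correct = 0
    for train_idx, test_idx in k_fold_indices(len(xs), n_folds):
        train_x = [xs[i] for i in train_idx]
        train_y = [ys[i] for i in train_idx]
        correct += sum(knn_predict(train_x, train_y, xs[i], k) == ys[i] for i in test_idx)
    return correct / len(xs)

# Made-up toy data: two well-separated 1-D clusters.
xs = [0.1, 0.3, 0.2, 0.4, 2.1, 2.3, 2.2, 2.4]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

# Grid search: score every candidate hyperparameter value with cross-validation.
scores = {k: cross_val_accuracy(xs, ys, k) for k in (1, 3, 5)}
```

Each candidate value of `k` is scored only on data the model did not see during fitting, which is what makes the comparison between hyperparameter settings fair.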

Applications:

- Classification and Regression: Scikit-learn is commonly used for building models that predict categorical labels (classification) or continuous values (regression). It’s suitable for a wide range of applications, from spam detection to predicting housing prices.

- Clustering and Dimensionality Reduction: The library provides tools for clustering data (e.g., k-Means, DBSCAN) and reducing the dimensionality of datasets (e.g., Principal Component Analysis). These techniques are valuable for exploratory data analysis and visualization.

- Pipeline Automation: Scikit-learn’s pipeline feature allows users to automate the process of applying a sequence of transformations and then fitting a model. This is particularly useful for managing complex workflows and ensuring that the same preprocessing steps are applied during both training and prediction.

- Educational Use: Due to its simplicity and well-documented nature, Scikit-learn is widely used in academic settings to teach machine learning concepts. It provides a hands-on experience with algorithms and techniques without the complexity of more advanced frameworks.
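
The pipeline idea, where preprocessing learned during training is reused unchanged at prediction time, can be illustrated with a small sketch. This is a plain-Python toy that borrows the fit/transform/predict naming convention; the `Standardizer`, `ThresholdClassifier`, and `Pipeline` classes below are hypothetical and are not Scikit-learn's own classes.

```python
from statistics import mean, pstdev

class Standardizer:
    """Learns mean and standard deviation in fit(); reuses them in transform()."""

    def fit(self, xs):
        self.mean_, self.std_ = mean(xs), pstdev(xs) or 1.0
        return self

    def transform(self, xs):
        return [(x - self.mean_) / self.std_ for x in xs]

class ThresholdClassifier:
    """Predicts 1 when the (already standardized) value is above zero."""

    def fit(self, xs, ys):
        return self  # nothing to learn in this toy model

    def predict(self, xs):
        return [int(x > 0) for x in xs]

class Pipeline:
    """Chains transformers and a final model behind one fit/predict interface."""

    def __init__(self, transformers, model):
        self.transformers, self.model = transformers, model

    def fit(self, xs, ys):
        for t in self.transformers:
            xs = t.fit(xs).transform(xs)  # statistics come from training data only
        self.model.fit(xs, ys)
        return self

    def predict(self, xs):
        for t in self.transformers:
            xs = t.transform(xs)          # reuse fitted statistics, no refit
        return self.model.predict(xs)

train_x, train_y = [10.0, 12.0, 30.0, 32.0], [0, 0, 1, 1]
pipe = Pipeline([Standardizer()], ThresholdClassifier()).fit(train_x, train_y)
predictions = pipe.predict([11.0, 29.0])
```

Bundling the steps this way means new data can never be scaled with different statistics than the training data was, which is a common source of subtle bugs when preprocessing is done by hand.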

Community and Development:

- Active Community: Scikit-learn has a large and active user base, contributing to continuous improvement and the addition of new features. The community-driven development ensures that the library remains up-to-date with the latest advancements in machine learning.

- Extensive Documentation: The library comes with comprehensive documentation, including user guides, API references, and examples. This makes it easier for new users to learn and apply machine learning techniques effectively.

Summary:

Scikit-learn is a powerful, easy-to-use machine learning library that is ideal for building and deploying machine learning models on moderate-sized datasets. Its wide range of algorithms, tools for preprocessing and model evaluation, and integration with the Python ecosystem make it a go-to choice for data scientists and developers. Whether you’re conducting exploratory data analysis, building predictive models, or teaching machine learning concepts, Scikit-learn provides the tools you need to succeed.

The rise of these open-source AI frameworks underscores a collaborative and progressive approach in the AI sphere. With significant updates and feature expansions on the horizon, 2024 promises to be a pivotal year in bolstering the capabilities and reach of these influential frameworks.

Promising Open Source AI Tools and Libraries

As the adoption of AI continues to expand, several open-source tools and libraries have emerged as vital resources for developers, researchers, and organizations. Among these, Hugging Face’s Transformers, OpenCV, and OpenAI’s Gym have gained significant traction within the community due to their robust capabilities and ease of integration.

Hugging Face’s Transformers library has become a cornerstone in natural language processing (NLP) tasks, offering a wide range of pre-trained models for tasks such as text classification, machine translation, and text generation. Its versatility and comprehensive documentation make it a go-to choice for both beginners and experienced practitioners. Recent updates have introduced more efficient model architectures and integration with other popular frameworks, further solidifying its position in the AI toolkit.

OpenCV, another essential library, focuses on computer vision applications. It provides a wide array of tools for image processing, object detection, and facial recognition, among others. Recent developments in OpenCV have enhanced its capabilities to handle real-time processing more efficiently. The library’s extensive functionality and strong community support make it indispensable for projects requiring robust visual data analysis.

OpenAI’s Gym, a toolkit for developing and comparing reinforcement learning algorithms, offers a variety of simulated environments to test and refine these algorithms. It serves as an invaluable resource for researchers by providing standardized environments and benchmarks for evaluation. Maintenance has since moved to the community-run Gymnasium fork under the Farama Foundation, which keeps the same core interface, so the Gym API remains a fundamental component in the reinforcement learning landscape.
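
The standardization mentioned above largely comes down to a small interface: an environment that can be reset and stepped. The sketch below mimics that shape in plain Python. `CoinFlipEnv` and `run_episode` are hypothetical, and the return values follow the older four-value `step` convention as an assumption; newer Gym and Gymnasium releases return additional values from `reset` and `step`, so check the version in use.

```python
import random

class CoinFlipEnv:
    """Hypothetical toy environment: guess a coin flip, reward 1.0 when right."""

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps = 0

    def reset(self):
        self.steps = 0
        return 0  # dummy observation; this toy task has no state to observe

    def step(self, action):
        coin = self.rng.randint(0, 1)
        reward = 1.0 if action == coin else 0.0
        self.steps += 1
        done = self.steps >= self.episode_length
        return 0, reward, done, {}

def run_episode(env, policy):
    """Agent-environment loop: observe, act, collect reward, repeat until done."""
    observation = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = policy(observation)
        observation, reward, done, info = env.step(action)
        total_reward += reward
    return total_reward

# A trivial policy that always guesses 1.
total = run_episode(CoinFlipEnv(), policy=lambda obs: 1)
```

Because every environment exposes the same loop, the same `run_episode` function could evaluate any policy on any environment, which is what makes results comparable across algorithms.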

Looking ahead to 2024, several new open-source tools are emerging with significant potential. For instance, JAX, developed by Google, is rapidly gaining attention for its high-performance machine learning abilities. By enabling optimized and scalable computations, JAX simplifies the development of complex models and is poised to become a key player in the AI ecosystem.

Furthermore, the rise of end-to-end platforms like TensorFlow Extended (TFX) is notable. TFX is designed for building and deploying production machine learning pipelines, helping models developed in research labs transition to production environments. As AI continues to evolve, the synergy of these open-source tools will continue to drive innovation and accessibility in the field.

Open Source AI in the Industry

As technology demand continues to surge, the adoption of open-source AI projects is making a significant impact across numerous industries, from healthcare and finance to automotive. These industries are using open-source AI to drive innovation, improve efficiencies, and solve complex problems that were previously insurmountable. One vivid example is the healthcare sector, where open-source algorithms are being leveraged for predictive analytics, early diagnosis of diseases, and personalized treatment plans.

Take, for instance, the utilization of TensorFlow in developing predictive models that can detect diseases like diabetes and cancer in their nascent stages. Hospitals and research institutions are increasingly adopting such open-source tools to analyze vast datasets and identify patterns that could escape the human eye. This technology is not only enhancing diagnostic accuracy but also optimizing resources by predicting patient needs proactively.

In the finance industry, open-source libraries are being employed to analyze market trends, manage risks, and detect fraudulent activities. QuantLib, an open-source quantitative finance library, supports pricing and risk calculations, while machine learning libraries such as Scikit-learn are commonly applied to tasks like fraud detection. Financial institutions are integrating these tools to create more robust risk management frameworks and enhance their trading strategies, drawing on the collective efforts of a global developer community.

The automotive industry is also being transformed by open-source AI. Companies developing autonomous driving systems, such as Tesla and Waymo, build on open-source deep learning frameworks such as PyTorch and TensorFlow. Models trained with these frameworks enable vehicles to learn and adapt to various driving conditions, with the goal of making autonomous driving safer and more reliable. Continuous advancements in sensor technology and machine learning algorithms are setting the stage for a future where self-driving cars could become more widely available.

Overall, the trend of adopting open-source AI in various industries is anticipated to accelerate in 2024. Companies are increasingly recognizing the value of collaborative innovation, which not only speeds up development cycles but also ensures more transparent, reliable, and customizable AI solutions. Such widespread adoption of open-source AI is poised to revolutionize multiple sectors, pushing the boundaries of what technology can achieve.

Challenges and Considerations

Open-source AI projects represent a significant shift in the landscape of artificial intelligence, fostering innovation and collaboration. However, these ventures come with a unique set of challenges that must be acknowledged and addressed to ensure their long-term success and responsible development. One of the foremost issues revolves around funding. Open-source projects often rely on community contributions, grants, or sponsorships to sustain themselves. This pooled funding mechanism can be unpredictable, leading to concerns about project continuity and resource allocation. Companies and individuals must strategize long-term funding solutions, including diversified income streams and strategic partnerships, to ameliorate these uncertainties.

Security is another critical challenge in the realm of open-source AI. With source code accessible to anyone, there’s a potential risk of vulnerabilities being exploited maliciously. Developing robust security protocols and engaging in regular audits can mitigate these threats. Additionally, fostering a culture of transparency and rigorous peer review within the community can help identify and address security issues promptly.

Maintaining community engagement is also vital for the thriving of open-source AI projects. Without active participation from contributors, both in terms of code and discourse, the quality and innovation within these projects can stagnate. Tailoring communication strategies to attract and retain a diverse set of contributors, recognizing their efforts, and providing clear pathways for collaboration can enhance community involvement.

Ethical considerations form another layer of complexity. Open-source AI projects must take into account the ethical implications of their work. Ensuring responsible AI development involves making conscious efforts to avoid biases, uphold privacy standards, and prevent misuse of AI technologies. Establishing ethical guidelines and having dedicated oversight bodies can steer projects towards more conscientious and socially beneficial outcomes.

Overcoming these challenges requires concerted efforts from all stakeholders involved in open-source AI. By addressing funding concerns, enhancing security measures, fostering community engagement, and upholding ethical standards, open-source AI can continue to thrive and contribute positively to the technological ecosystem.

The Role of the Community and Collaboration

Community involvement and collaboration play a crucial role in the success of open-source AI projects. The collective effort of individuals from diverse backgrounds fosters an environment of innovation and shared knowledge that propels these projects forward. Open-source AI communities often organize various initiatives, such as hackathons and collaborative platforms, to stimulate creative problem-solving and accelerate development.

Hackathons, for instance, provide a fertile ground for AI enthusiasts and professionals to collaborate intensively on projects within a short time frame. These events not only bring together developers, researchers, and domain experts but also cultivate an atmosphere of innovation and learning. Projects like TensorFlow and PyTorch benefit from such community-driven efforts, where work that begins at hackathons and community sprints can make its way into the codebase as new features and improvements.

Collaborative platforms, including GitHub and GitLab, further enable the seamless sharing of code, ideas, and resources. These platforms are essential for coordinating large-scale contributions and ensuring that projects remain dynamic and adaptive. Many open-source AI projects also leverage forums and social media channels to maintain active communication with their user base, empowering developers to share insights and troubleshoot collectively.

Notable contributors to the open-source AI landscape include influential researchers and organizations, such as OpenAI, which has historically embraced community contributions and open collaboration. These entities set a precedent that encourages more participants to contribute, thereby enriching the ecosystem. Additionally, documentation and tutorials created by community members help onboard new users and lower the barriers to entry, fostering an inclusive and expanding community.

Individuals interested in getting involved can start small by participating in forums and contributing to documentation. More experienced developers can tackle open issues, contribute code, and even lead projects. Whether through small yet significant contributions or larger-scale developments, community involvement and collaboration are the bedrock upon which the success of open-source AI projects rests.

Future Trends and Predictions

As we look to the horizon of open-source AI, several emerging trends and technologies seem poised to redefine the landscape. Continuing the momentum, advancements in AI ethics stand out. Robust ethical frameworks are expected to become standard in open-source AI projects, ensuring transparency and fairness. These frameworks will likely focus on bias mitigation, data privacy, and ensuring that AI systems align with societal values.

Another potential game-changer is the evolution of autonomous systems. Enhanced by open-source contributions, these systems might reach unprecedented levels of sophistication and reliability. From self-driving cars to autonomous drones, the integration and improvement of AI algorithms within open-source platforms could significantly accelerate innovation. Open-source collaborative environments will enable researchers and developers to share, tweak, and optimize autonomous solutions, driving forward their practical deployment.

The integration of open-source AI with other emerging technologies is another intriguing trend. Blockchain, for instance, could offer secure, transparent data sharing for AI models. The convergence of these technologies could lead to novel applications, such as decentralized AI networks, providing scalable and trustable AI services across various sectors.

Additionally, we foresee a growing synergy between open-source AI and edge computing. As the need for real-time data processing becomes more critical, deploying AI models on edge devices will gain traction. This trend promises to minimize latency and reduce reliance on centralized cloud servers, making AI applications faster and more responsive.

Looking forward, the long-term impact of open-source AI on the industry seems substantial. With lower entry barriers, more organizations, including startups and academic institutions, will participate in AI development. This democratization of AI can lead to greater innovation and accelerate the adoption of AI across diverse fields, from healthcare to education.

Ultimately, the rise of open-source AI heralds an era where collaboration and transparency can drive technological breakthroughs, making AI more accessible and ethically sound. The next few years promise exciting developments, marking a pivotal period for the evolution of open-source AI.