Introduction to Deepfakes

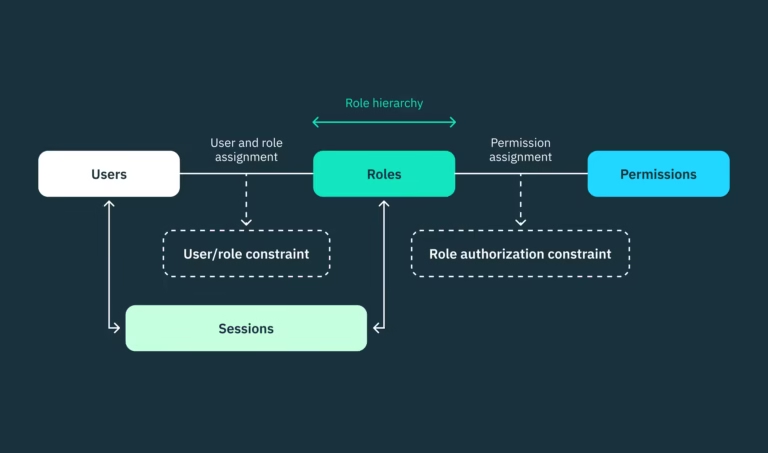

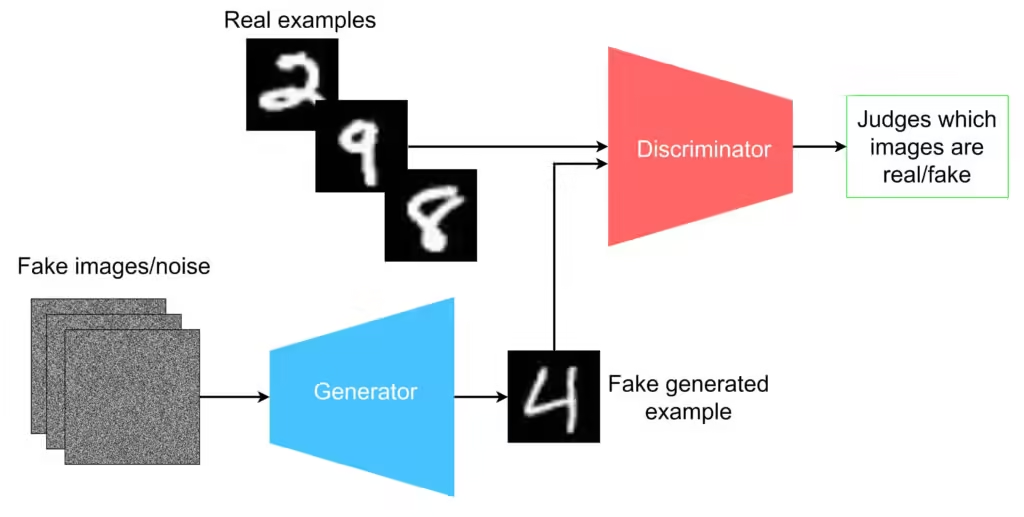

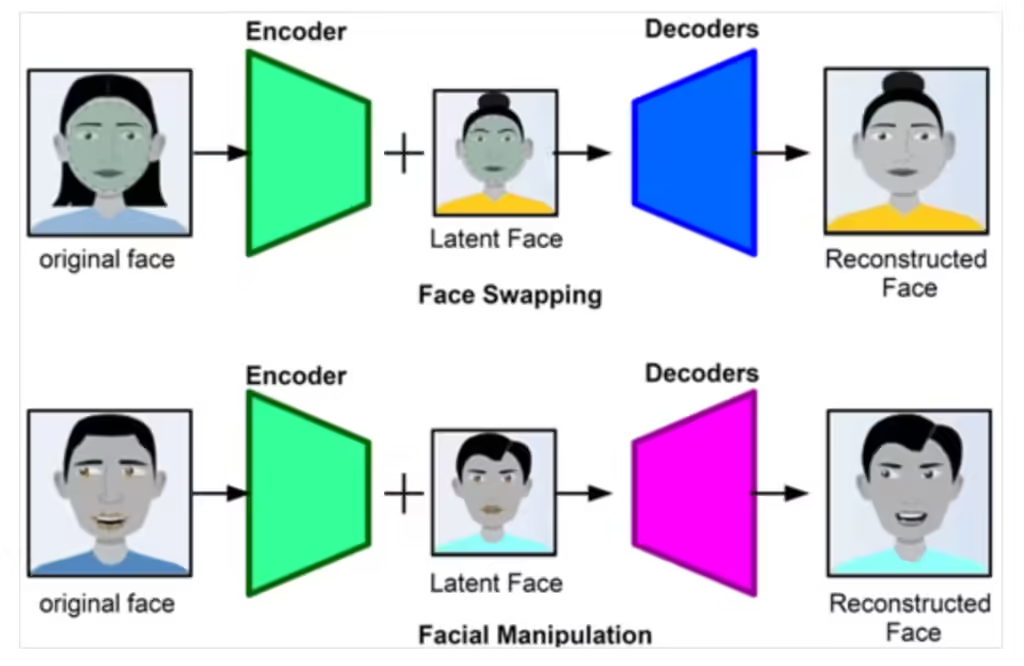

Deepfakes represent a significant advance in artificial intelligence: they use sophisticated techniques to create highly realistic fake media. At their core, deepfakes rely on deep learning, a subset of machine learning, to generate convincing representations of people and events. Two kinds of neural networks do much of this work: autoencoders, which underpin many face-swapping tools, and Generative Adversarial Networks (GANs). A GAN pits two neural networks against each other. The generator creates synthetic data, and the discriminator judges whether that data is real or generated. Training continues until, ideally, the discriminator can no longer reliably tell the generator’s output apart from real images or videos.
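For readers who want the underlying math, the adversarial game described above is usually written as the minimax objective from the original GAN formulation (Goodfellow et al., 2014). Here D(x) is the discriminator’s estimated probability that a sample x is real, G(z) maps random noise z to a synthetic sample, p_data is the distribution of real data, p_z is the noise distribution, and p_g is the distribution of generated samples. The second line gives the best possible discriminator for a fixed generator:

```latex
\begin{aligned}
\min_G \max_D \; V(D, G) &= \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr] \\
D^{*}_{G}(x) &= \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
\end{aligned}
```

If the generator matches the real data exactly (p_g = p_data), then D*_G(x) = 1/2 for every x. At that point the discriminator can do no better than a coin flip, which is the formal sense of “indistinguishable.” Real training runs only approximate this equilibrium.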

The rapid evolution of deepfake technology has made it increasingly accessible to the general public. Users can now generate deepfake content with relatively little expertise, thanks to user-friendly software and online platforms. Consequently, this democratization of advanced technology raises pressing concerns about its potential misuse. While the capabilities of deepfakes can be harnessed for creative and entertainment purposes, their ability to manipulate reality presents considerable risks in various domains, particularly in the cyber threat landscape.

Deepfakes have the potential to deceive, misinform, and erode trust in the media. They can be used to depict events that never occurred, putting individuals’ reputations at stake and weakening public trust in legitimate information sources. As these tools evolve, the implications of deepfakes expand, posing challenges not only for personal privacy but also for national security. With the proliferation of such technology, it has never been more crucial to understand deepfakes, how they are created, and their possible ramifications in order to guard against the evolving cyber threats they represent.

The Evolution of Cyber Threats

The landscape of cyber threats has undergone significant transformation since the inception of the internet. Initially, cyber threats consisted primarily of simple malware and viruses designed to disrupt systems or steal information. As technology evolved, so did the strategies of malicious actors. The rise of what are now traditional hacking methods, such as phishing and denial-of-service attacks, marked a shift in the focus of cybercriminals, who increasingly exploited system vulnerabilities for financial gain or data theft.

With the advancement of artificial intelligence (AI), the threat landscape has entered a new era. Cyber attackers have harnessed AI technologies to develop increasingly sophisticated methods of infiltration and deception. Machine learning, for example, allows cybercriminals to analyze user behavior and craft targeted attacks, making traditional protection methods less effective. These developments paved the way for the emergence of deepfakes, a revolutionary yet alarming application of AI.

Deepfakes use AI-generated images, video, and audio to produce realistic but fabricated content. This technology poses unique challenges for cybersecurity because it allows malicious actors to impersonate individuals convincingly, manipulate media, and create false narratives. For instance, deepfake technology can be leveraged for disinformation campaigns, in which political, financial, or social narratives are distorted using doctored videos or audio clips. This not only endangers individuals’ reputations but can also have far-reaching implications for organizations and public trust.

Moreover, the accessibility of tools for creating deepfakes has democratized the ability to produce convincing fraudulent media, enabling even novice cybercriminals to participate in these illicit activities. This evolution signifies not only an increase in the volume of cyber threats but also a drastic change in their complexity and reach, necessitating a reevaluation of existing cybersecurity measures to counter this formidable challenge.

Deepfakes in Misinformation Campaigns

The emergence of deepfake technology heralds a new era of misinformation and disinformation campaigns that pose significant threats to society. Deepfakes, which are hyper-realistic synthetic media created using artificial intelligence, have been utilized in various high-profile cases to deceive the public and manipulate perceptions. One notable instance occurred during the 2020 U.S. presidential election when a deepfake video falsely portrayed a candidate making inflammatory statements. The dissemination of this fabricated content contributed to public confusion and raised concerns about the integrity of democratic processes.

Another striking example involves the use of deepfakes in the manipulation of political speeches and debates. By altering video footage, malicious actors can create misleading narratives that distort a public figure’s stance on critical issues. Such alterations not only misinform viewers but also foster distrust in legitimate media sources, as audiences struggle to discern between genuine reporting and distorted representations. This erosion of trust can have profound implications, hindering informed public discourse and undermining electoral integrity.

Moreover, deepfakes are not limited to political arenas. They have infiltrated social spheres, where they can harm reputations and spread falsehoods about individuals. For example, non-consensual deepfake pornography has emerged, causing distress to victims and raising serious ethical questions. The misuse of deepfake technology in any context highlights the urgent need for comprehensive regulation and robust authentication methods to combat the proliferation of deceptive media.

As deepfake technology continues to evolve, it becomes increasingly essential for individuals and organizations to remain vigilant against misinformation campaigns. Educating the public on the characteristics and dangers of deepfake content is crucial to cultivating a more discerning audience capable of questioning the veracity of media presentations. The far-reaching consequences of deepfakes demand a collective effort to safeguard the integrity of information in the digital age.

Deepfake Technology in Cyber Threats

The use of deepfakes as a cyber threat isn’t just hypothetical. Deepfakes have already been used in numerous documented incidents, revealing the serious impact they can have on individuals, companies, and governments.

a. Social Engineering Attacks

Social engineering is a type of attack in which cybercriminals exploit human psychology to gain unauthorized access to sensitive information. Deepfakes elevate these attacks by introducing highly believable visual and auditory deception. For instance, a deepfake video or audio clip could impersonate a CEO, instructing employees to transfer funds or disclose confidential data.

Case Example

In 2019, a UK-based energy company experienced one of the earliest reported cases of a deepfake cyberattack. Cybercriminals used AI-generated audio that mimicked the voice of the chief executive of the firm’s German parent company. They called the UK firm’s CEO and instructed him to transfer €220,000 to the account of a supposed Hungarian supplier, resulting in substantial financial losses.

b. Phishing and Spear-Phishing

Deepfakes add a powerful layer to phishing scams by pairing ordinary messages with convincing audio or video, such as a cloned voice message or a faked video call that follows up on an email. Spear-phishing, a targeted form of phishing, becomes even more effective with personalized deepfake messages that convincingly mimic trusted individuals, such as family members, executives, or colleagues. By manipulating media in this way, attackers can make their scams considerably more persuasive.

c. Disinformation Campaigns

Another alarming application of deepfakes in cyber threats is disinformation. Cybercriminals can use deepfake technology to spread false narratives and misleading information, especially on social media platforms. Manipulated videos of public figures can spark panic, influence elections, and manipulate stock prices, causing real-world damage to society, economies, and democratic institutions. For example, a deepfake video of a prominent politician can potentially mislead citizens, affecting public opinion and voter behavior.

Why Deepfakes Are Hard to Detect

One reason deepfakes pose such a serious threat is that they are difficult to detect, and they are becoming harder to detect as the technology advances. GANs can produce near-photorealistic output, which makes it very hard for the untrained eye to distinguish real from fake. The main challenges in deepfake detection include:

- High-Resolution Fabrications: Advanced GANs produce deepfakes in high resolution, making subtle imperfections less noticeable.

- Constantly Improving Algorithms: Deepfake technology is evolving at a rapid pace, with new techniques overcoming previous limitations.

- Availability of Deepfake Tools: Numerous deepfake creation tools are available online, making it easy for people with limited technical skills to produce convincing content.

The Psychological and Economic Impact of Deepfakes

a. Undermining Trust

Deepfakes can erode trust in digital media and information. If people cannot differentiate between real and fake media, trust in news sources, public officials, and even personal interactions is compromised. Over time, this can breed blanket skepticism, in which people doubt or dismiss even authentic content because it might have been fabricated.

b. Financial Loss

The financial consequences of deepfake cyber threats are substantial. Companies face increased risk from deepfake-enabled scams that target them directly, such as Business Email Compromise (BEC) schemes reinforced with cloned voices or faked video calls that push employees into fraudulent transactions. Insurance claims and legal battles over deepfake fraud and defamation can also impose significant financial burdens on companies and individuals.

c. Psychological Harm and Privacy Invasion

Misuse of deepfake technology against individuals can have severe psychological consequences. For instance, deepfakes have been used to create non-consensual pornographic material depicting real people, which can cause emotional trauma and reputational damage. This has raised serious privacy concerns and prompted calls for stronger regulatory action against the misuse of deepfakes.

Examples of Deepfake Cyber Threats

a. Political Manipulation

Deepfakes have been deployed to manipulate political sentiment, especially around election seasons. For instance, a fabricated video of a politician making incendiary remarks can quickly spread online, potentially swaying public opinion. This type of manipulation threatens democratic institutions and undermines fair elections.

b. Corporate Espionage and Financial Fraud

Deepfake technology is used to impersonate corporate executives in order to commit high-stakes fraud. Voice-based deepfakes, like the one in the 2019 UK incident, can convince employees to make unauthorized financial transactions or disclose sensitive company information.

c. Targeting Personal Identities

Individuals are often victimized by deepfake technology, especially public figures who are susceptible to having their images or voices manipulated for defamatory or blackmail purposes. Deepfakes have also been used in cyberbullying, where fabricated videos can damage reputations, cause psychological harm, and lead to social ostracism.

Defending Against Deepfake Cyber Threats

As deepfake threats grow, various defense strategies are being explored to mitigate their impact:

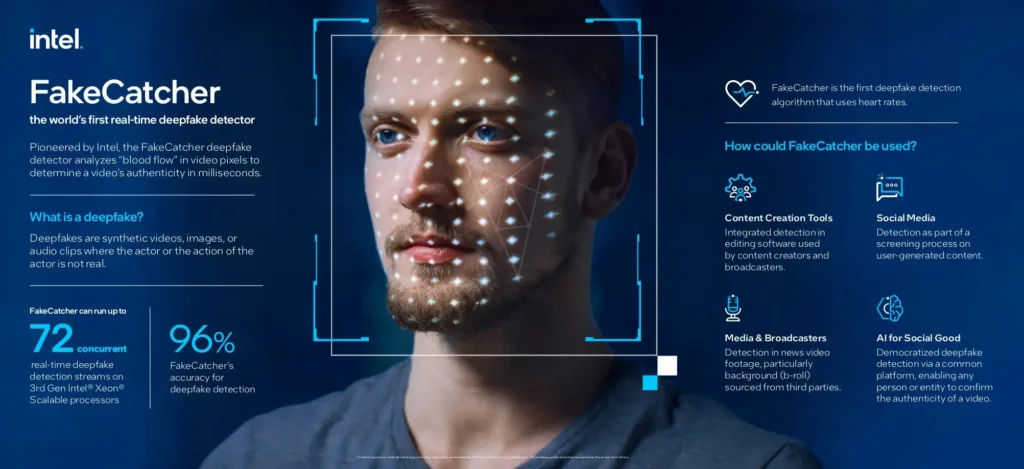

a. Deepfake Detection Technologies

Several companies and research institutions are developing AI tools to identify deepfakes. These tools analyze the artifacts and inconsistencies that deepfake media often exhibit, such as unnatural blinking or subtle facial irregularities. However, these technologies face a constant challenge in keeping up with the evolution of deepfake techniques.
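As a rough illustration of one artifact-based cue, the sketch below estimates blink rate from eye landmarks using the eye aspect ratio (EAR) described by Soukupová and Čech (2016). It assumes that some face-landmark detector (not shown) already supplies six (x, y) points per eye per frame, ordered p1 to p6, with p1 and p4 at the eye corners. The 0.2 EAR threshold, the two-frame minimum, and the 5-blinks-per-minute cutoff are illustrative assumptions, not validated values. Many newer deepfakes blink normally, so this cue is weak on its own.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2-p6| + |p3-p5|) / (2 * |p1-p4|) for landmarks p1..p6."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    # A blink still in progress when the clip ends also counts.
    if run >= min_frames:
        blinks += 1
    return blinks


def low_blink_rate(ears: Sequence[float], fps: float, min_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below a hypothetical cutoff."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ears) / minutes < min_per_minute


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
    one_minute_no_blinks = [0.3] * 1800  # 60 s at 30 fps, eyes always open
    print(low_blink_rate(one_minute_no_blinks, fps=30))  # True
```

A detector in practice would combine many such weak signals, not rely on a single threshold, which is one reason these tools struggle to keep pace with improving generators.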

b. Blockchain for Verification

Blockchain technology has emerged as a potential solution for authenticating the origin of digital media. When a file is published, its cryptographic fingerprint (a hash) can be recorded on a tamper-evident ledger, often together with the publisher’s digital signature. Anyone can later check whether a copy they encounter matches the registered original. Blockchain-based verification has significant potential in journalism, government communications, and other sectors vulnerable to deepfake misinformation.
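To make this concrete, the sketch below keeps an append-only hash chain of SHA-256 media fingerprints, which is the core data structure behind a blockchain ledger. It is a simplification under stated assumptions. It runs in a single process with no distributed consensus, the `publisher` field is not cryptographically signed (a real system would attach a public-key signature), and `ProvenanceLedger` and its methods are hypothetical names. An exact hash also changes whenever a file is re-encoded or cropped, so some provenance schemes, such as C2PA, instead attach signed metadata manifests to the media.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional

GENESIS_PREV_HASH = "0" * 64


def fingerprint(media: bytes) -> str:
    """SHA-256 digest of the exact media bytes."""
    return hashlib.sha256(media).hexdigest()


@dataclass
class Block:
    index: int
    media_hash: str
    publisher: str  # unauthenticated label; a real system would sign this
    prev_hash: str

    def block_hash(self) -> str:
        # Hash a canonical JSON encoding of every field, including prev_hash,
        # so altering any block breaks the link stored in its successor.
        payload = json.dumps(
            {"index": self.index, "media_hash": self.media_hash,
             "publisher": self.publisher, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLedger:
    def __init__(self) -> None:
        self.blocks: List[Block] = []

    def register(self, media: bytes, publisher: str) -> Block:
        """Append a block linking this media's fingerprint to the previous block."""
        prev = self.blocks[-1].block_hash() if self.blocks else GENESIS_PREV_HASH
        block = Block(len(self.blocks), fingerprint(media), publisher, prev)
        self.blocks.append(block)
        return block

    def chain_is_intact(self) -> bool:
        """True if every block still points at the hash of its predecessor."""
        return all(
            cur.prev_hash == prev.block_hash()
            for prev, cur in zip(self.blocks, self.blocks[1:])
        )

    def publisher_of(self, media: bytes) -> Optional[str]:
        """Return who registered these exact bytes, or None if unregistered."""
        digest = fingerprint(media)
        for block in self.blocks:
            if block.media_hash == digest:
                return block.publisher
        return None
```

For example, after `ledger.register(clip, "Newsroom")`, `ledger.publisher_of(clip)` returns `"Newsroom"`, while a doctored copy of `clip` returns `None`. Editing an earlier block’s recorded hash makes `chain_is_intact()` return `False`.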

c. Regulatory Frameworks

Governments around the world are recognizing the need for regulation to address the misuse of deepfake technology. China and several U.S. states have adopted rules penalizing the malicious use of deepfakes. In the EU, the Digital Services Act requires large online platforms to address risks such as the spread of disinformation.

d. Cybersecurity Awareness Training

Educating the public and employees about the dangers of deepfake technology is crucial in building a defense. Companies can implement cybersecurity training that teaches employees to recognize deepfake threats and follow protocols when responding to suspicious media or communications.
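One widely recommended protocol against voice- and video-based impersonation is out-of-band verification. Before acting on a high-risk request, staff confirm it through a separate, pre-established channel, for example by calling back a number already on file rather than one supplied by the caller. The sketch below encodes such a rule. The `TransferRequest` fields, the channel names, and the €10,000 threshold are hypothetical policy choices for illustration.

```python
from dataclasses import dataclass

# Hypothetical policy values; each organization would set its own.
REMOTE_CHANNELS = {"voice", "video", "email", "chat"}
CALLBACK_THRESHOLD_EUR = 10_000


@dataclass
class TransferRequest:
    requester: str
    amount_eur: float
    channel: str           # how the request arrived, e.g. "voice" or "in_person"
    new_beneficiary: bool  # True if the receiving account has never been paid before


def needs_out_of_band_check(req: TransferRequest) -> bool:
    """Payments to new accounts, and remote high-value requests, need a callback."""
    if req.new_beneficiary:
        return True
    return req.channel in REMOTE_CHANNELS and req.amount_eur >= CALLBACK_THRESHOLD_EUR


def may_execute(req: TransferRequest, confirmed_via_known_number: bool) -> bool:
    """A convincing voice or face is never sufficient on its own."""
    return confirmed_via_known_number or not needs_out_of_band_check(req)
```

Under this rule, a €220,000 request made by phone would be held until it was confirmed through a known number, however convincing the caller’s voice sounded. The point of the design is that approval depends on the channel and the amount, not on a judgment about whether the media looks or sounds real.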

e. Media Literacy Programs

Media literacy programs help individuals understand how to critically evaluate information sources and recognize potential misinformation, including deepfakes. By increasing awareness, these programs reduce the likelihood that individuals will fall victim to deepfake-enabled scams or disinformation.

Future Outlook: AI Arms Race in Deepfake Technology

The battle between deepfake creators and cybersecurity professionals has become a virtual arms race. As detection technology improves, so too does the sophistication of deepfake generation techniques. This cycle is expected to continue, driven by advances in both deepfake creation and AI-powered detection.

a. Development of Counter-Deepfake AI

Future defenses may rely on counter-deepfake AI, where AI systems are specifically trained to detect and counteract deepfake attempts. These systems could be deployed in social media networks, newsrooms, and financial institutions to prevent the spread of false information and unauthorized access to sensitive data.

b. Role of Collaboration in Cybersecurity

A collaborative approach between tech companies, governments, and researchers is essential in addressing deepfake threats. By sharing resources, expertise, and data, stakeholders can create more robust solutions and establish global standards for identifying and mitigating deepfake cyber threats.

c. Emerging Ethical Considerations

As deepfake technology advances, ethical discussions around its use and implications for privacy and personal identity will likely intensify. Society will need to navigate the fine line between harnessing AI’s creative potential and preventing its misuse in deceptive and harmful ways.

Future Trends in Deepfake Technology

As artificial intelligence (AI) continues to advance, the technology behind deepfakes is likely to evolve significantly. Deepfake creation is becoming increasingly sophisticated, enabling more convincing and realistic fake media. Future advances could include algorithms that not only render images and videos seamlessly but also automate the fabrication process end to end. As ever more capable tools become publicly available, deepfake technology may become even more accessible and widely used, raising new concerns about misinformation and deception.

On the detection front, researchers are developing more effective countermeasures against deepfakes. Machine learning models that analyze content for signs of manipulation are likely to become more prevalent. Innovations in watermarking and digital identification may also make verification easier for users. As detection methods improve, the cat-and-mouse game between creators and detectors will continue, with each side striving to outpace the other.

The societal impacts of these advancements will be profound. While deepfake technology can be used for creative expression and entertainment, the darker applications raise concerns about trust and authenticity in communication. As the ease of creating false media increases, issues related to fake news and personal privacy will become even more pressing. In scenarios such as election misinformation or defamation, the stakes will be higher than ever, compelling governments, organizations, and individuals to adopt a more proactive stance towards understanding and managing the implications of deepfake technology.

Overall, the future of deepfake technology holds further improvements in both creation and detection capabilities. It also presents significant challenges as society grapples with the ramifications of these advances.

Conclusion: Navigating the Dark Side of AI

In recent years, artificial intelligence has transformed many sectors, yet its use in deepfakes has raised significant concerns about cyber threats. The manipulation of video and audio content through sophisticated AI algorithms poses risks not only to individuals but also to organizations and even national security. The ability to create realistic deepfake media erodes trust in visual information and complicates verification, creating an environment where misinformation can flourish.

As demonstrated, the consequences of deepfake technology extend far beyond mere entertainment; they can influence elections, incite violence, or damage reputations. This alarming trend highlights the urgency for increased awareness, as individuals and organizations must remain vigilant against the potential of AI-generated deception. The integration of regulations specific to the creation and distribution of deepfakes is imperative. Governments and regulatory bodies must establish frameworks to address the complexities surrounding the ethical use of AI while safeguarding against its misuse.

Furthermore, both technology developers and users need to take proactive measures to combat the harmful implications of deepfakes. Advanced detection tools and educational programs can help individuals recognize manipulated media. Encouraging ongoing dialogue about the ethical ramifications of AI can foster a more informed society, better equipped to navigate the challenges posed by these advances.

In conclusion, addressing the dark side of AI requires a collective effort from all sectors. By fostering collaboration among technologists, legislators, and the public, we can cultivate a more secure digital landscape, counter the threats posed by deepfakes, and ensure that the positive aspects of AI are harnessed responsibly and ethically. Engaging in continuous discussions about these issues will be crucial as we strive to navigate the complexities introduced by AI technologies.